1970s Article 1 — The First Personal Computers

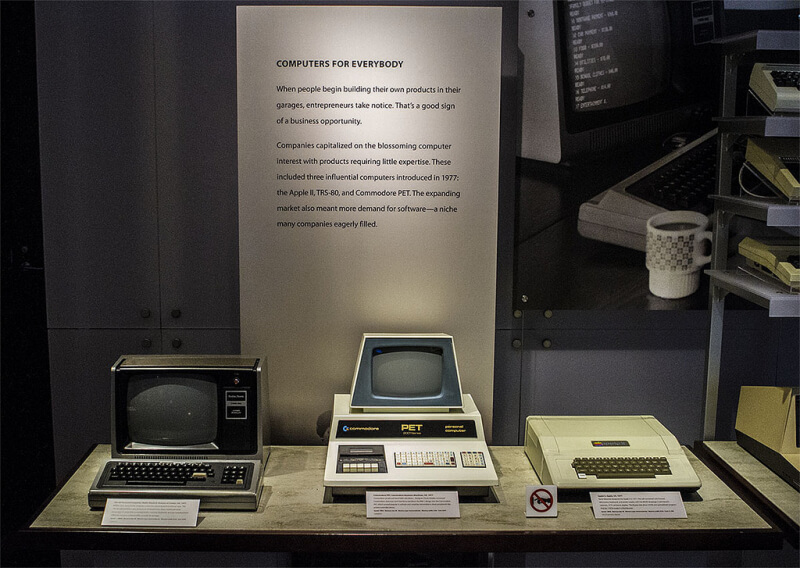

The "1977 Trinity": Apple II, TRS-80, and Commodore PET.

Techspot.com

The 1970s set the stage for what modern computers would become, and that influence is still felt today.

Going into the 1970s, the foundation of modern computing had not yet been poured and set. Yes, much of the math behind computers had been worked out well over a century earlier, but most of that work remained in the realm of theory and experimentation.

But the '70s started to change that. This was the decade in which real infrastructure and standards that persist to this day took shape. Here are some of its standout innovations:

- 1971: Invention of the EEPROM

  - The precursor to flash memory.

  - Think USB flash drives.

- 1971: Intel releases the 4004, both the company's first processor and the first commercially available single-chip CPU, or microprocessor.

  - Intel is still a leading hardware company today.

  - All modern CPUs are microprocessors.

- 1974: The microcontroller (MCU) and system on a chip (SoC) arrive, putting all the needed computing elements on a single, cheaper chip.

  - A design now dominant in smartphones and increasingly common in traditional computers.

These innovations, and many more, spurred the computerization of the world.

1970s Article 2 — Setting Things in Stone

The 1970s were not limited to hardware advancements. Many software standards were also created then, and they have survived to the present day.

- The C Programming Language

  - Created in 1972.

  - Still dominant in many low-level applications, such as the Linux kernel.

- Unix

  - Created in 1969 at AT&T's Bell Labs for internal use within the Bell System.

  - Unix and the Unix-like systems it inspired became the basis for the vast majority of modern operating systems, including:

    - GNU+Linux

    - Android

    - iOS

    - macOS

    - ...and basically everything else that isn't Windows.

- Email's conceptualization

  - The terms "electronic mail" and "email" came into use in 1975 and 1979, respectively.

  - Although the internet would not reach the general public for another couple of decades, these terms reflect growing expectations of a future in which computers would be in the hands of individuals.

Whether or not they were realized in the '70s, many ideas about what modern computing would look like were formulated during the decade.

1970s Article 3 — The 1970s' Legacy on Computing

A significant number of the standards introduced to computing during the 1970s replaced those of previous decades and have persisted to the present day.

Floppy Disks

8-inch, 5¼-inch, and 3½-inch floppy disks.

George Chernilevsky, via Wikimedia Commons

IBM introduced floppy disks to the market in 1972, a move that "sent into retirement the punched card, which had been a key to its success since its founding in 1911" (IBM). Although floppy disks largely fell out of use a few decades later, their legacy lives on.

Why the C: Drive?

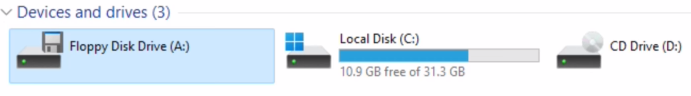

Windows 11, the latest OS from Microsoft, still has reserved seats for floppy drives.

A screenshot of the YouTube channel Mercia Solutions' video "Windows 11 supports floppy discs."

If you, like me, have a computer running Windows, you've no doubt noticed that your hard drive is called "C:" and that any other storage device you plug in afterward gets the next letter of the alphabet. And if you, also like me, have wondered how the geniuses over at Microsoft who built such complex software forgot the first two letters of the alphabet (maybe, to their alpha- and beta-less brains, it's the "Gammadelt"), you have the floppy disks to blame.

Or maybe it would be more accurate to blame the floppy drives. After all, it was the standard inclusion of two of them in early computers that led operating systems to reserve "A:" and "B:". Since floppy drives were standard in computers before hard drives were (you'd boot your computer from a floppy disk containing the OS every time you turned it on), they got the first letters.
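The lettering scheme described above can be modeled in a few lines of Python. This is a minimal sketch, not how Windows actually assigns drive letters internally: `assign_letter` and `RESERVED_FOR_FLOPPIES` are hypothetical names, and the model assumes A: and B: stay reserved even when no floppy drive is installed.

```python
import string

# Hypothetical model: A and B are held back for floppy drives,
# whether or not any floppy drive is actually present.
RESERVED_FOR_FLOPPIES = {"A", "B"}


def assign_letter(in_use: set[str]) -> str:
    """Return the first letter, alphabetically, that is neither reserved nor taken."""
    for letter in string.ascii_uppercase:
        if letter not in RESERVED_FOR_FLOPPIES and letter not in in_use:
            return letter
    raise RuntimeError("no drive letters left")


drives: set[str] = set()
for device in ("hard drive", "USB stick", "SD card"):
    letter = assign_letter(drives)
    drives.add(letter)
    print(f"{device} -> {letter}:")
# hard drive -> C:
# USB stick -> D:
# SD card -> E:
```

Even with no floppy drives in the machine, the first disk still lands on C:, which is exactly the quirk the floppy reservation explains.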

A quick Google image search for "save icon."

My own screenshot of Google.com.

That made sense at the time, but practically nobody uses floppy disks anymore. So why does their legacy survive? The answer is likely something bigger.

The Y2K Bug

The Y2K bug caused affected computers to interpret every year as if it were in the 1900s. This display should say "2000."

Bug de l'an 2000 via Wikimedia Commons

Perhaps nothing illustrates more clearly than the Millennium Bug, AKA Y2K, that old computer technology was being used past its expected lifespan. As computers found their way into more and more systems, such as electronic signage and banking calculations, many of them took the shortcut of storing years as just two digits. For example, the year "1974" would be stored as just "74," and the computer would add 1900 to the stored number to get the full year. That meant the year 2000, stored as "00," would be read back as 1900. Storing two digits instead of four saved memory, which was expensive at the time; the programmers most likely accepted that trade-off because they didn't expect their software to still be in use by the year 2000.
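The two-digit shortcut and its failure can be sketched in Python. The helpers `store_year` and `read_year` are hypothetical, and real systems stored dates in many different formats; this only models the "store two digits, add 1900" scheme described above.

```python
def store_year(full_year: int) -> int:
    """Keep only the last two digits of the year (the memory-saving shortcut)."""
    return full_year % 100


def read_year(stored: int) -> int:
    """Rebuild the full year by assuming it falls in the 1900s."""
    return 1900 + stored


for year in (1974, 1999, 2000):
    stored = store_year(year)
    print(f"{year} stored as {stored:02d}, read back as {read_year(stored)}")
# 1974 stored as 74, read back as 1974
# 1999 stored as 99, read back as 1999
# 2000 stored as 00, read back as 1900

# Date arithmetic breaks too: years elapsed from '74 to '00.
print(store_year(2000) - store_year(1974))  # -74 instead of 26
```

Everything works until the century rolls over; after that, both the displayed year and any calculation built on it go wrong.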

Conclusion about the Decade

While it's now evident how pivotal the 1970s were in the history of computing, it's equally evident that the people of the decade weren't fully aware of how large a legacy they were leaving.